How many layers to fine-tune?

Fine-tuning lets you improve the quality of a pre-trained model with just a fraction of the resources spent on training the original model.

But there is a trade-off between the number of layers you tune and the precision you get.

Tuning fewer layers allows for faster training with larger batch sizes, while tuning more layers increases the model's capacity to adapt.

The Qdrant team ran experiments so you can make more educated choices.

Here are some highlights:

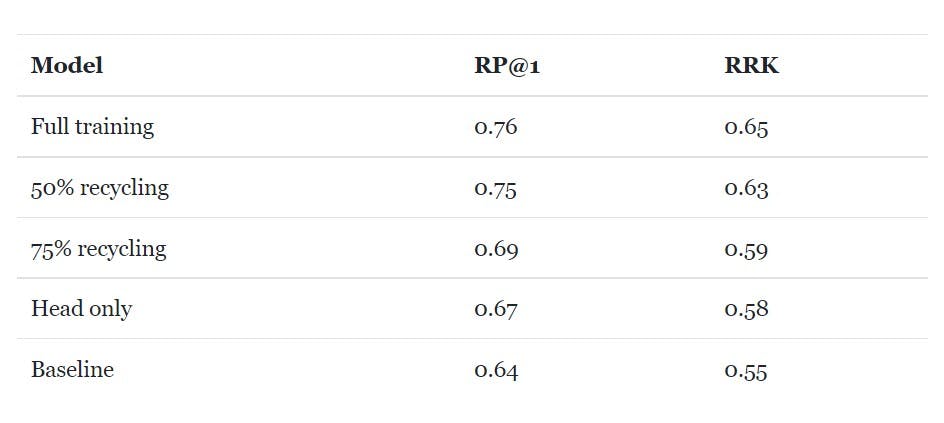

Training only the head of a model (5% of weights) gives a 2x boost on metrics, while full training gives only 3x.

Training only the head layer lets you use larger models with bigger batch sizes, which compensates for the lost precision.

If you only have a small dataset, the extra benefit of full model tuning becomes negligible.
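To get a feel for what "tuning only the head" means in terms of weights, here is a minimal sketch in plain Python. The layer names and parameter counts are hypothetical, chosen so that the head holds 5% of the weights as in the highlight above; `Layer`, `tune_last_layers`, and `trainable_fraction` are illustrative helpers, not part of any Qdrant library.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    num_params: int
    trainable: bool = False  # frozen by default


def tune_last_layers(layers, k):
    """Return a copy of `layers` with only the last `k` layers marked trainable."""
    if not 0 <= k <= len(layers):
        raise ValueError("k must be between 0 and the number of layers")
    cutoff = len(layers) - k
    return [
        Layer(layer.name, layer.num_params, trainable=(i >= cutoff))
        for i, layer in enumerate(layers)
    ]


def trainable_fraction(layers):
    """Share of all weights that would be updated during fine-tuning."""
    total = sum(layer.num_params for layer in layers)
    trained = sum(layer.num_params for layer in layers if layer.trainable)
    return trained / total


# Hypothetical toy model: four encoder blocks plus a small head (assumed sizes).
toy_model = [
    Layer("encoder_block_1", 2_375_000),
    Layer("encoder_block_2", 2_375_000),
    Layer("encoder_block_3", 2_375_000),
    Layer("encoder_block_4", 2_375_000),
    Layer("head", 500_000),
]

if __name__ == "__main__":
    # Compare head-only tuning, head plus one block, and full tuning.
    for k in (1, 2, len(toy_model)):
        tuned = tune_last_layers(toy_model, k)
        names = [layer.name for layer in tuned if layer.trainable]
        print(f"last {k} layer(s): {trainable_fraction(tuned):.2%} of weights -> {names}")
```

The fewer weights you update, the less memory goes to gradients and optimizer state, which is what frees up room for larger batch sizes.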

Read more in the article qdrant.tech/articles/embedding-recycler by Yusuf Sarıgöz.